The European Commission has just released its proposal for an Artificial Intelligence Act, which would regulate uses of Artificial Intelligence (AI) so as to adequately address the benefits and risks of this technology at Union level. The regulation aims to create a safe space for AI innovation that complies with a high level of protection of the public interest, safety, and fundamental rights and freedoms, while creating the conditions for an ecosystem of trust that will foster the uptake of AI services.

The proposal follows a risk-based approach, meaning that obligations and restrictions will apply depending on the level of risk stemming from the use of AI. This follows recommendations from the Ethics Guidelines for Trustworthy Artificial Intelligence, the EU White Paper on AI, and input provided by Telefónica and many other stakeholders to European public consultations on AI.

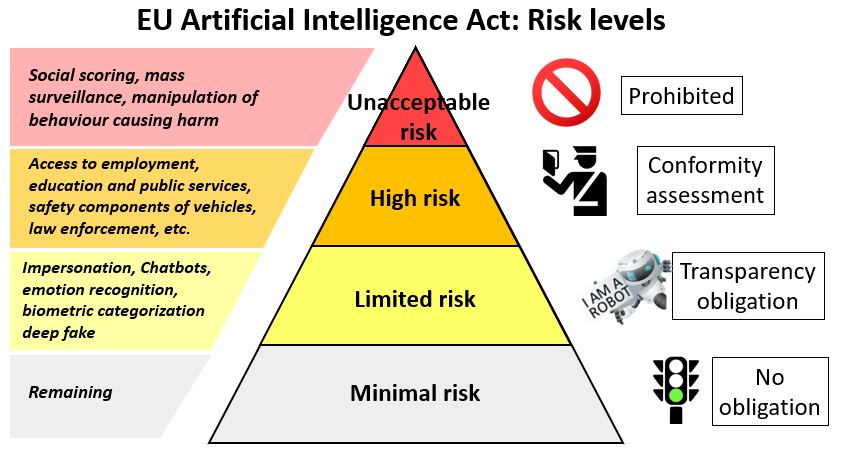

The regulation explicitly identifies four levels of risk. The top level comprises uses presenting an unacceptable risk to the safety, livelihoods, and rights of people. This use cases are prohibited unless authorised by law for national security purposes and include social scoring AI systems, manipulation of human behaviour causing harm, and mass surveillance.

(Source: Telefónica)

The second level covers high-risk uses, which will be subject to a conformity assessment before they can be placed on the market. The conformity assessment examines the quality of the data sets (to minimise risks and discriminatory outcomes); documentation and record keeping for traceability; transparency and the provision of information to users; human oversight; and robustness, accuracy, and cybersecurity. The EU has defined a list of AI uses that would be considered high risk, such as access to employment, education, and public services; management of critical infrastructure; safety components of vehicles; and law enforcement and the administration of justice.

The third level covers limited-risk uses, which will be subject only to transparency obligations. For example, users of AI-based chatbots should be made aware that they are interacting with a machine.

Finally, the fourth level includes minimal-risk uses, which will not be subject to any obligations, though the adoption of voluntary codes of conduct is recommended. Such codes could enhance trust in AI and provide a lever for service differentiation, and thus a competitive advantage among service providers.

Overall, the proposed regulation seems fit for purpose: it provides a trusted environment in which Europe can lead the adoption and use of innovative AI systems while rights and freedoms are protected in line with EU values.

The AI Act opens supervision and conformity assessment (though not in all cases) to third parties, which shall have access to the data used to train AI systems and even to their source code or algorithms. This represents a paradigm shift, as these bodies will analyse personal and confidential data, trade secrets, and source code protected by intellectual property rights. Such far-reaching supervisory powers will have to be matched by commensurate responsibility and by the adoption of proportionality and minimisation principles for interventions.

More generally, the proportionality of obligations is an area of concern, which could be addressed through a more granular identification of high-risk uses. This would avoid labelling less problematic uses as high risk, and so avoid placing on them a disproportionate burden that does nothing to enhance trust while increasing development costs and time to market. Such a burden could also put European developers at a competitive disadvantage relative to others, especially when targeting non-European markets.

The regulation will apply to all uses of AI affecting EU citizens, no matter where the service provider is based or where the system is developed or run, within or outside EU boundaries. This is also the case for other EU regulations, such as the General Data Protection Regulation (GDPR), and for other legislative proposals, such as the Digital Markets Act (DMA) and the Digital Services Act (DSA).

Despite the difference in substance, the GDPR offers some hints about issues that should be addressed from the outset. A ruling by the Court of Justice of the EU has invalidated the EU-US Privacy Shield, the framework that provided legal certainty for GDPR-compliant transfers of personal data between the two regions. Additionally, the European Data Protection Board has raised concerns about alternative data transfer mechanisms and issued nonbinding recommendations that, if adopted, could further inhibit data transfers. This has left companies in a legally complex situation until they adapt their data transfers to these developments, and has increased uncertainty about what additional requirements international data transfers may have to meet to be GDPR compliant.

Since the Artificial Intelligence Act would apply, for example, to AI services offered to EU citizens from third countries, how the regulation will be enforced, and how conformity assessment and supervision will be implemented in such cases, is highly relevant. In fact, this is what the EU-US GDPR case highlights: how important it is to clarify and ease the extraterritorial application of EU regulations. Full cooperation between the EU and third countries is needed to ensure the compatibility of regulatory outcomes, enabling flexible frameworks for regulatory compliance and conformity assessment that deliver legal certainty through the least burdensome procedures possible.

For the EU to succeed and lead in the development, adoption, and use of Artificial Intelligence based on European values, a smooth application of the Artificial Intelligence Act, with all legal guarantees for affected parties outside EU boundaries, should be a top priority.

The proposal will now be presented to the European Parliament and the Council for approval under the ordinary legislative procedure. While the process is unlikely to be completed before 2023, the EU should pursue a swift process so that the AI Act enters into force in a timely manner, enabling the widespread adoption of AI that is fully aligned with EU values and respects fundamental rights and freedoms.