The Institute for Digital Development in Latin America and the Caribbean has organised a master class (#CharlasIDD) with Gonzalo López-Barajas, Head of Public Policy and Internet at Telefónica, to analyse the proposed European law that will govern the use of Artificial Intelligence (AI).

Where does the initiative come from?

The European Union began discussing options for regulating Artificial Intelligence in 2018, when it first presented an AI strategy and agreed a coordinated plan with Member States. A year later, the European Commission made "a Europe fit for the digital age" one of its priorities for 2019-2024, a priority that included the debate on human-centric and ethical artificial intelligence.

In 2019, the Commission published the Ethics Guidelines for Trustworthy Artificial Intelligence, and in 2020 it released the White Paper on Artificial Intelligence. The White Paper set out an industrial strategy for Europe to embrace and lead the development of this technology, and proposed a legislative framework to build trust in AI while respecting fundamental rights and ethics in Europe.

The high point of this process came in April 2021 with the publication of the proposal for an Artificial Intelligence Regulation, which aims to regulate the uses of AI so as to address both the benefits and the risks they bring.

With this move, the EU is pioneering a regulatory framework for AI on a global scale. As Margrethe Vestager, Executive Vice-President of the European Commission, explained: “We adopt a landmark proposal of this Commission: our first legal framework for Artificial Intelligence… Today we aim to make Europe a world leader in the development of safe, reliable and human-centric Artificial Intelligence and its use”.

Telefónica has actively participated in all the consultation processes that have accompanied these regulatory developments, from its contribution to the ethics guidelines to its input on the White Paper, among other proposals.

Definition of AI, scope of application and categories

To understand the regulatory proposal, we first need to be clear about what is meant by AI, so that we know what is being regulated and how far the rules reach.

Under the proposal, AI is software developed using one or more of the following techniques: machine learning approaches (including deep learning), logic- and knowledge-based approaches, and statistical approaches (including Bayesian estimation). For a given set of human-defined objectives, such software can generate outputs, such as content, predictions, recommendations or decisions, that influence the environments it interacts with.

This is a very broad definition of a technology that will be decisive for the development of the European Union. A more precise definition would therefore be needed, so that the regulation does not capture systems for which falling within its scope would become a serious barrier to adoption without bringing any corresponding benefit.

However, a very significant advantage of the proposal is its scope of application, which follows earlier regulatory developments such as the General Data Protection Regulation, as well as others still in progress, such as the Digital Services Act and the Digital Markets Act. The new proposal will thus apply to all actors offering AI systems that affect individuals located in the European Union, regardless of whether those actors are established within its borders.

“Europe has clear rules to provide legal certainty and consumer protection when it comes to goods and services. Today we are the first jurisdiction in the world to propose a comprehensive legal framework to do the same for AI,” said Thierry Breton, European Commissioner for the Internal Market.

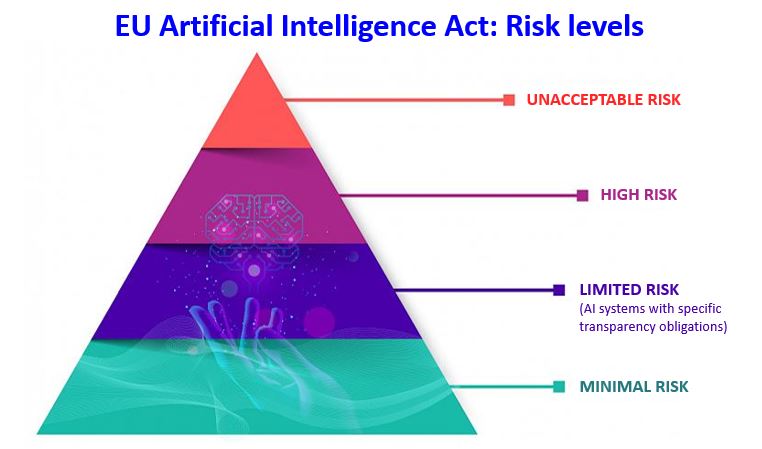

The proposal takes a risk-based approach: the obligations that apply depend on the impact that the use of an AI system may have on people, and they are grouped into four main levels:

- Unacceptable risk, which would include practices such as social scoring, mass surveillance and harmful manipulation of behaviour. These uses would be prohibited, except where authorised by law for national security reasons.

- High risk, which would include access to employment, education and public services, safety components of vehicles, and law enforcement, among others. These systems would require a conformity assessment, carried out either in-house or by a third party.

- Limited risk, which encompasses practices such as impersonation, chatbots, emotion recognition, deep fakes and biometric categorisation. This category carries a transparency obligation: people must be informed that they are interacting with an AI system (unless this is obvious), and “deep fakes” must be labelled.

- Minimal risk, covering all other uses. For these, the proposal encourages the voluntary adoption of codes of conduct, which may include transparency commitments.

Telefónica’s position

For the EU to succeed and lead the development, adoption and use of Artificial Intelligence based on European values, a smooth implementation of the Artificial Intelligence Act must be a priority.

Telefónica has been an active player in the AI ecosystem for a number of years. We use this technology in a wide range of services and activities: from our Big Data business unit, LUCA, to Aura, our AI-based virtual assistant, and the improvement of the efficiency of internal processes.

Aware of the potential impact of AI on the economy, people and society, we were pioneers in addressing its complexity in our Manifesto for a New Digital Deal (2018) and, more recently, in our Digital Deal to build back better our societies and economies (2020). In both, we highlighted the ethical use of technology as a fundamental pillar of digital trust and examined its implications for democratic systems.

To maximise the potential benefits of AI while minimising its risks, and to build our customers' trust in its use and adoption, Telefónica has led the private sector in defining ethical principles for AI to be applied across the company. These principles apply not only to the design, development and use of products and services by company employees, but also to suppliers and third parties.

These initiatives are in line with the European Union’s proposal and Telefónica’s commitment to the ethical, safe and reliable use of technology.