Artificial Intelligence (AI) is a game-changing technology for every industry and for society as a whole.

The benefits of AI extend across societies and countries. This technology can improve the well-being of the population, advance environmental sustainability goals, speed up humanitarian action and help preserve cultural heritage. It can also help optimise healthcare systems by facilitating the detection of diseases, and it can enable tailor-made solutions for each student or employee, favouring inclusion and adaptation to the needs of the labour market.

Its use in business is already transforming industrial sectors, enabling new business models, changing the way we research and innovate, and redefining capabilities and ways of working. AI makes it possible to:

- make quick, data-driven decisions,

- optimise manufacturing and management processes,

- minimise operational costs and generate efficiencies.

However, its design and use are not without risk. The biggest challenge we face today is to design a governance model for AI that can harness its full potential while protecting human rights, democracy and the rule of law.

Artificial Intelligence and Generative AI

Position paper 2024

Artificial Intelligence

Innovation, ethics and regulation.

The scope of Artificial Intelligence is not limited by national borders, so it requires global solutions and approaches. It is time to promote convergence at regional and global levels, including the identification of shared ethical principles.

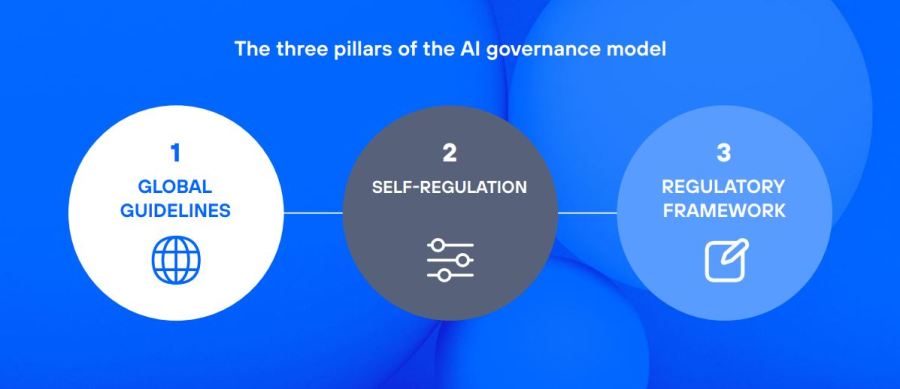

The approach requires a holistic vision with a balanced and coherent governance model based on three pillars: global guidelines, self-regulation and risk-based regulation.

The dual objective is to mitigate risks, build citizens’ trust and safeguard their health, safety and rights, while promoting innovation and the adoption of technology.

Governance model: from ethical principles to their implementation

Telefónica has approved ethical principles for AI that apply throughout the company. These principles apply from the design and development stage onwards, including to the use of products and services by company employees, suppliers and third parties.

Related document

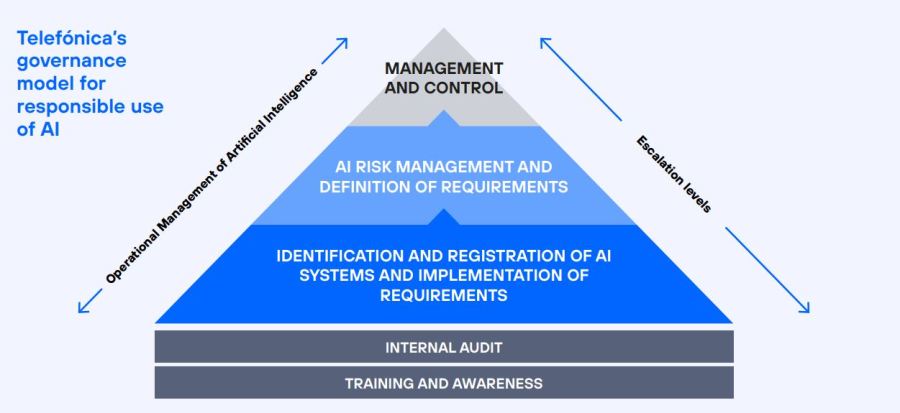

The application of these principles is based on a “Responsibility by Design” approach, which allows us to incorporate ethical and sustainable criteria throughout the value chain. It is a governance model based on three levels: