Artificial Intelligence will have a far-reaching impact on our societies. There are numerous positive opportunities[1],[2],[3], but there are also risks[4],[5],[6]. In response to those risks, several “AI-active” companies have published AI principles to ensure that their AI-related activities remain on the “good” side. Google, IBM, Microsoft, Deutsche Telekom and Telefonica are among them.

It is, however, one thing to publish a declaration of how AI will be created and used in an organization. It is quite another to implement it across the organization, especially in large multinational companies.

There is still little experience in making this happen, but there are several resources to get started, notably the IEEE Ethics Certification Program for Autonomous and Intelligent Systems. In addition, solution providers such as Accenture and IBM are developing consultancy offerings to help organizations implement Responsible AI.

In this post, we explain how Telefonica is approaching the topic. In summary, Telefonica’s AI Principles are:

- Fair AI means that the use of AI should not lead to discriminatory impacts on people in relation to race, ethnic origin, religion, gender, sexual orientation, disability or any other personal condition. When optimizing a machine learning algorithm for accuracy in terms of false positives and negatives, the impact of the algorithm in the specific domain should be considered.

- Transparent and Explainable AI means being explicit about the kind of personal and/or non-personal data the AI system uses, as well as about the purpose for which the data is used. When people interact directly with an AI system, it should be clear to them that this is the case. When AI systems take, or support, decisions, a level of understanding of how the conclusions are reached must be ensured that is adequate to the specific application area (the level required is not the same for advertising as for medical diagnosis).

- Human-centric AI means that AI should be at the service of society and generate tangible benefits for people. AI systems should always stay under human control and be driven by value-based considerations. AI used in products and services should in no way lead to a negative impact on human rights or the achievement of the UN’s Sustainable Development Goals.

- Privacy and Security by Design means that, since AI systems are fuelled by data, privacy and security aspects are an inherent part of the system’s lifecycle from the moment it is created.

- By extension, the principles also apply when working with partners and third parties.
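To make the Fair AI principle’s point about false positives and negatives more concrete, the sketch below computes both error rates separately for each group of a sensitive attribute, so that a model that looks accurate overall but errs much more often for one group becomes visible. It is a minimal illustration under our own assumptions, not Telefonica’s tooling; the function names `group_error_rates` and `max_gap` and the toy data are hypothetical.

```python
from collections import defaultdict


def group_error_rates(y_true, y_pred, groups):
    """Return {group: (false_positive_rate, false_negative_rate)} for binary 0/1 labels."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            c["fn"] += int(pred == 0)  # a true positive the model missed
        else:
            c["neg"] += 1
            c["fp"] += int(pred == 1)  # a true negative the model flagged
    # NaN marks a rate that is undefined because the group has no such cases.
    return {
        g: (c["fp"] / c["neg"] if c["neg"] else float("nan"),
            c["fn"] / c["pos"] if c["pos"] else float("nan"))
        for g, c in counts.items()
    }


def max_gap(rates, index):
    """Largest between-group difference in FPR (index=0) or FNR (index=1).

    Assumes every group has both positive and negative cases (no NaN rates).
    """
    values = [r[index] for r in rates.values()]
    return max(values) - min(values)


# Toy data (hypothetical): 1 = flagged as "high risk".
y_true = [1, 0, 0, 1, 0, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = group_error_rates(y_true, y_pred, groups)
print(rates)
print("FPR gap:", max_gap(rates, 0), "FNR gap:", max_gap(rates, 1))
```

On this toy data, group `b` has a false negative rate of 1.0 against 0.0 for group `a`. Which gap matters more depends on the domain: in criminal risk assessment a false positive may wrongly penalize someone, while in medical screening a false negative may mean a missed diagnosis.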

Because large-scale uptake of AI in organizations is still a recent phenomenon, we do not currently advocate a “control by committee” approach to checking all principles, as this would stifle progress in an area where speed is essential. Rather, we believe that we need to make all concerned professionals aware of the issues and enable them to resolve most issues locally, within their own area. In specific cases escalation may be needed, but we believe that the important steps now are dissemination, awareness raising and training.

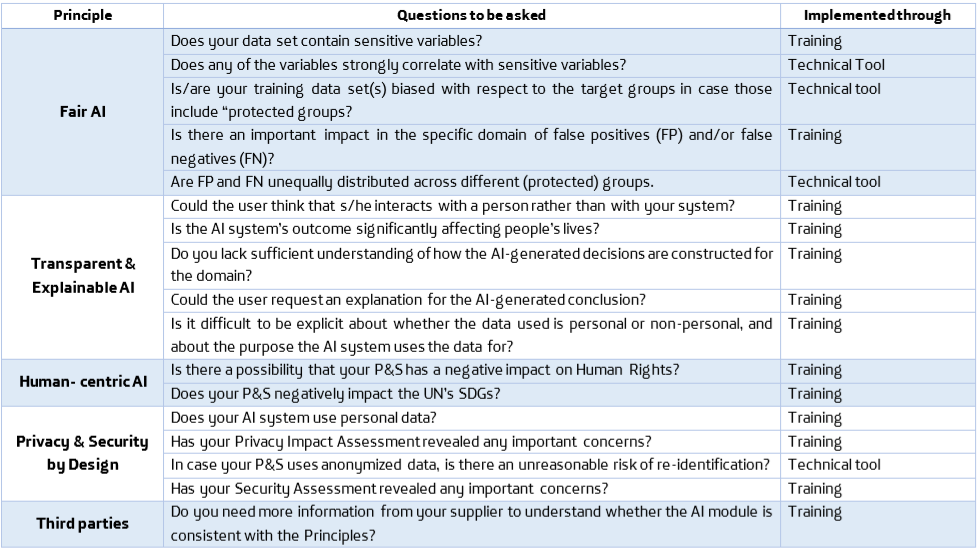

Our approach is to decompose each Principle into a set of questions, and to incorporate those questions into our standard product and service design methodologies. We therefore need to train our professionals to answer those questions, and to provide an escalation mechanism only in case of doubt. The table below shows the questions for each Principle and whether each is implemented through training or technical tools.
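The checklist approach can be sketched as a small data structure. This is a hypothetical illustration only: the questions are our own paraphrases of the principles listed earlier, not the contents of the table, and the training/tool tag on each is an assumption. A “yes” passes, a “no” is an issue the team resolves locally, and a “doubt” (or a missing answer) triggers escalation, mirroring the light-governance approach described here.

```python
from dataclasses import dataclass
from enum import Enum


class Support(Enum):
    TRAINING = "training"
    TOOL = "technical tool"


class Answer(Enum):
    YES = "yes"
    NO = "no"
    DOUBT = "doubt"


@dataclass(frozen=True)
class Question:
    principle: str
    text: str
    support: Support  # assumed tagging, not taken from the actual table


# Hypothetical questions paraphrased from the principles, not the actual table.
CHECKLIST = [
    Question("Fair AI",
             "Have false positives and negatives been weighed against their impact in this domain?",
             Support.TOOL),
    Question("Transparent and Explainable AI",
             "Is it clear to users when they are interacting with an AI system?",
             Support.TRAINING),
    Question("Transparent and Explainable AI",
             "Are the kinds of data used, and the purpose, stated explicitly?",
             Support.TRAINING),
    Question("Privacy and Security by Design",
             "Are privacy and security part of every stage of the system's lifecycle?",
             Support.TRAINING),
]


def review(answers):
    """Sort checklist questions into passed, fix-locally and escalate buckets.

    `answers` maps question text to an Answer; unanswered questions count as doubts.
    """
    passed, fix_locally, escalate = [], [], []
    for q in CHECKLIST:
        answer = answers.get(q.text, Answer.DOUBT)
        if answer is Answer.YES:
            passed.append(q)
        elif answer is Answer.NO:
            fix_locally.append(q)  # resolved within the team's own area
        else:
            escalate.append(q)     # only doubts go up the escalation path
    return passed, fix_locally, escalate


answers = {
    CHECKLIST[0].text: Answer.YES,
    CHECKLIST[1].text: Answer.NO,
    CHECKLIST[2].text: Answer.DOUBT,
    # CHECKLIST[3] is left unanswered on purpose.
}
passed, fix_locally, escalate = review(answers)
for q in escalate:
    print(f"escalate [{q.principle}]: {q.text}")
```

Treating an unanswered question as a doubt is a deliberate design choice in this sketch: it keeps gaps in a review from silently passing.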

Given the early state of the art in this field, technical tool support is still limited, and therefore training is the only feasible option for answering most of the questions. Some questions require specific training in Machine Learning and AI, while others require more general training, for instance, to estimate the societal impact of the application. Currently available tools mostly check datasets for unwanted bias, which might lead to unfair discrimination, and look for “hidden” correlations between sensitive variables (race, ethnicity, religion, etc.) and seemingly neutral variables (postal code, education level) that can act as proxies for them. Only a few such tools exist, some open source (IBM, Pymetrics, Aequitas) and others proprietary (Accenture).
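The two kinds of checks mentioned above can be illustrated in a few lines of plain Python. The sketch computes a disparate impact ratio (the favourable-outcome rate of one group divided by that of another; ratios below 0.8 are often flagged, following the “four-fifths rule” from US employment guidelines) and Cramér’s V, a standard measure of association between two categorical variables, to find candidate proxies for a sensitive attribute. The function names, the 0.3 proxy threshold and the toy data are our own assumptions; the tools named above offer far more complete metrics.

```python
import math
from collections import Counter


def disparate_impact(outcomes, groups, unprivileged, privileged):
    """Favourable-outcome rate (outcome == 1) of `unprivileged` divided by that of `privileged`."""
    def rate(group):
        selected = [o for o, g in zip(outcomes, groups) if g == group]
        return sum(selected) / len(selected)  # assumes the group is non-empty
    return rate(unprivileged) / rate(privileged)


def cramers_v(xs, ys):
    """Cramér's V between two categorical sequences: 0 = no association, 1 = perfect."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    x_counts, y_counts = Counter(xs), Counter(ys)
    chi2 = 0.0
    for x, nx in x_counts.items():
        for y, ny in y_counts.items():
            expected = nx * ny / n
            chi2 += (joint[(x, y)] - expected) ** 2 / expected
    k = min(len(x_counts), len(y_counts)) - 1
    return math.sqrt(chi2 / (n * k)) if k > 0 else 0.0


def find_proxies(sensitive, columns, threshold=0.3):
    """Names of columns whose association with `sensitive` reaches `threshold` (hypothetical cut-off)."""
    return [name for name, values in columns.items()
            if cramers_v(sensitive, values) >= threshold]


# Toy dataset (hypothetical): one row per applicant.
group = ["g1", "g1", "g1", "g1", "g2", "g2", "g2", "g2"]
approved = [1, 1, 1, 0, 1, 0, 0, 0]
columns = {
    "postal_code": ["28001", "28001", "28001", "28002",
                    "28002", "28002", "28002", "28001"],
    "education": ["x", "y", "x", "y", "x", "y", "x", "y"],
}

di = disparate_impact(approved, group, unprivileged="g2", privileged="g1")
print(f"disparate impact: {di:.2f}")  # approval rates 0.25 / 0.75
print({name: cramers_v(group, values) for name, values in columns.items()})
print("possible proxies:", find_proxies(group, columns))
```

On this toy data the approval-rate ratio is about 0.33, and postal code is associated with the sensitive group (V = 0.5) while education is not. This is why simply dropping the sensitive column does not guarantee a fair model: a proxy can carry the same information.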

To advance the state of the art, Telefonica has organized a Challenge for the responsible use of AI. Its objectives are 1) to find out whether the concerns are limited to a few highly visible cases, or whether they potentially occur on a much larger scale, and 2) to develop tools and/or algorithms that help detect and mitigate the concerns. The challenge is open from Nov 13 to Dec 15, 2018, and winners will be notified before Dec 31.

A complete methodology for “Responsible AI by Design” would need to include at least:

- AI Principles, that state the values, the commitments and the boundaries

- Questions to ask (check list) as illustrated in the table

- Awareness & training, to ensure that all AI-related professionals sufficiently understand the problems at stake, and to enable them to answer the questions adequately

- Technical tools, that automatically check certain characteristics of the datasets and the algorithms

- Governance, that defines who is responsible for what and sets out the escalation process

At this early stage, we are in favour of a light form of governance, so that our professionals can learn, understand and fully grasp the potential impact of AI while working with it. This promotes responsibility and is more motivating than imposing limits through controlling committees. If AI regulation comes into force at some later stage, however, the organization will be well prepared, because the knowledge and habits will already be part of its way of working.

[1] PwC (2018). Sizing the prize: what’s the real value of AI for your business and how can you capitalise? Available at: https://www.pwc.com/gx/en/issues/analytics/assets/pwc-ai-analysis-sizing-the-prize-report.pdf

[2] ITU (2018). AI for Good. Global Summit: Accelerating progress towards the SDGs. Available at: https://www.itu.int/en/ITU-T/AI/2018/Pages/default.aspx

[3] Telefonica (2017). Big Data for social good: giving back the value of data. Available at: https://luca-d3.com/big-data-social-good/index.html

[4] Angwin, Julia; Larson, Jeff; Mattu, Surya; Kirchner, Lauren (2016). Machine Bias. Available at: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

[5] BBC (2018). Amazon scrapped ‘sexist AI’ tool. Available at: https://www.bbc.com/news/technology-45809919

[6] Moore, John (2016). Police mass face recognition in the US will net innocent people. Available at: https://www.newscientist.com/article/2109887-police-mass-face-recognition-in-the-us-will-net-innocent-people/